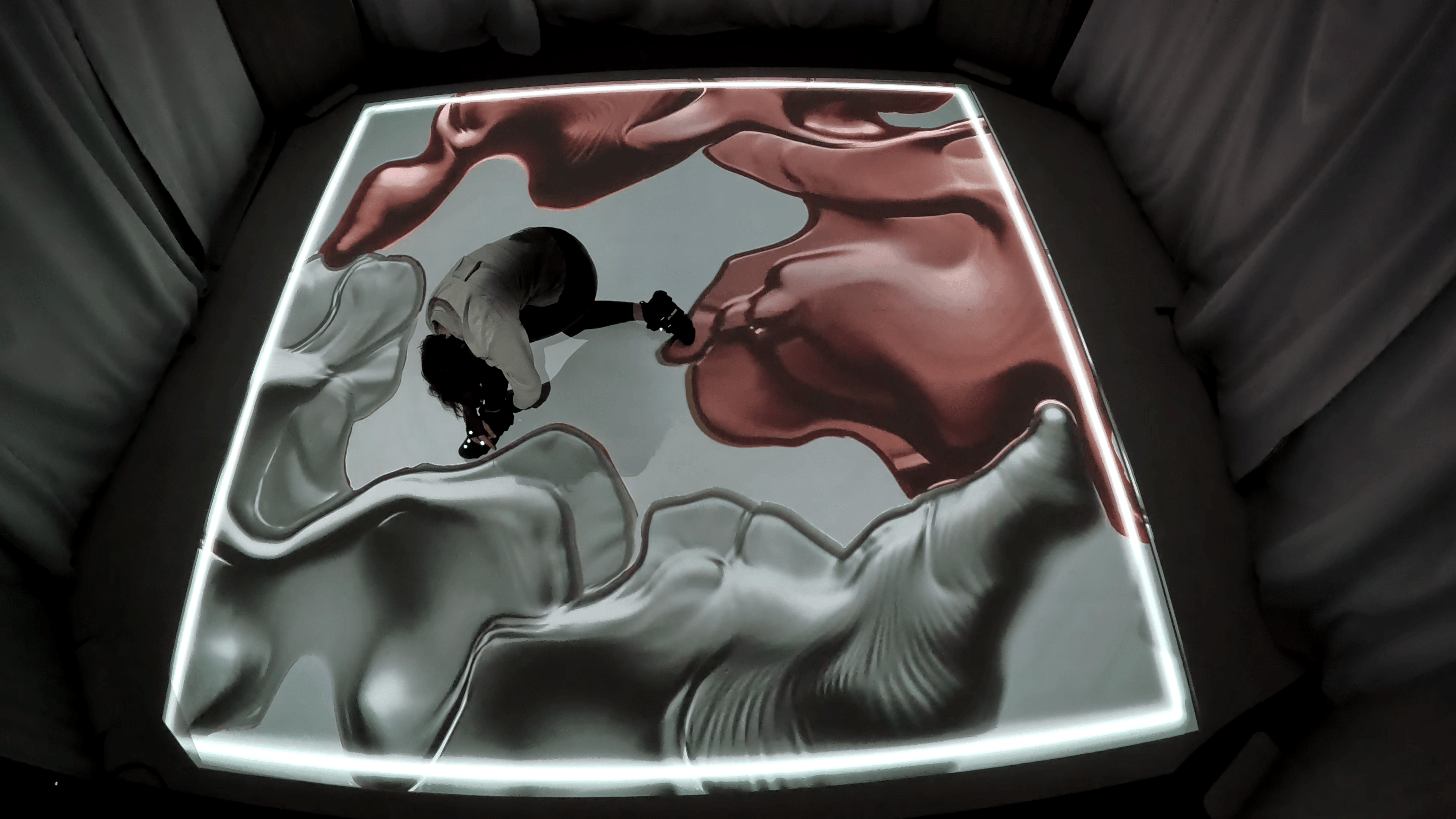

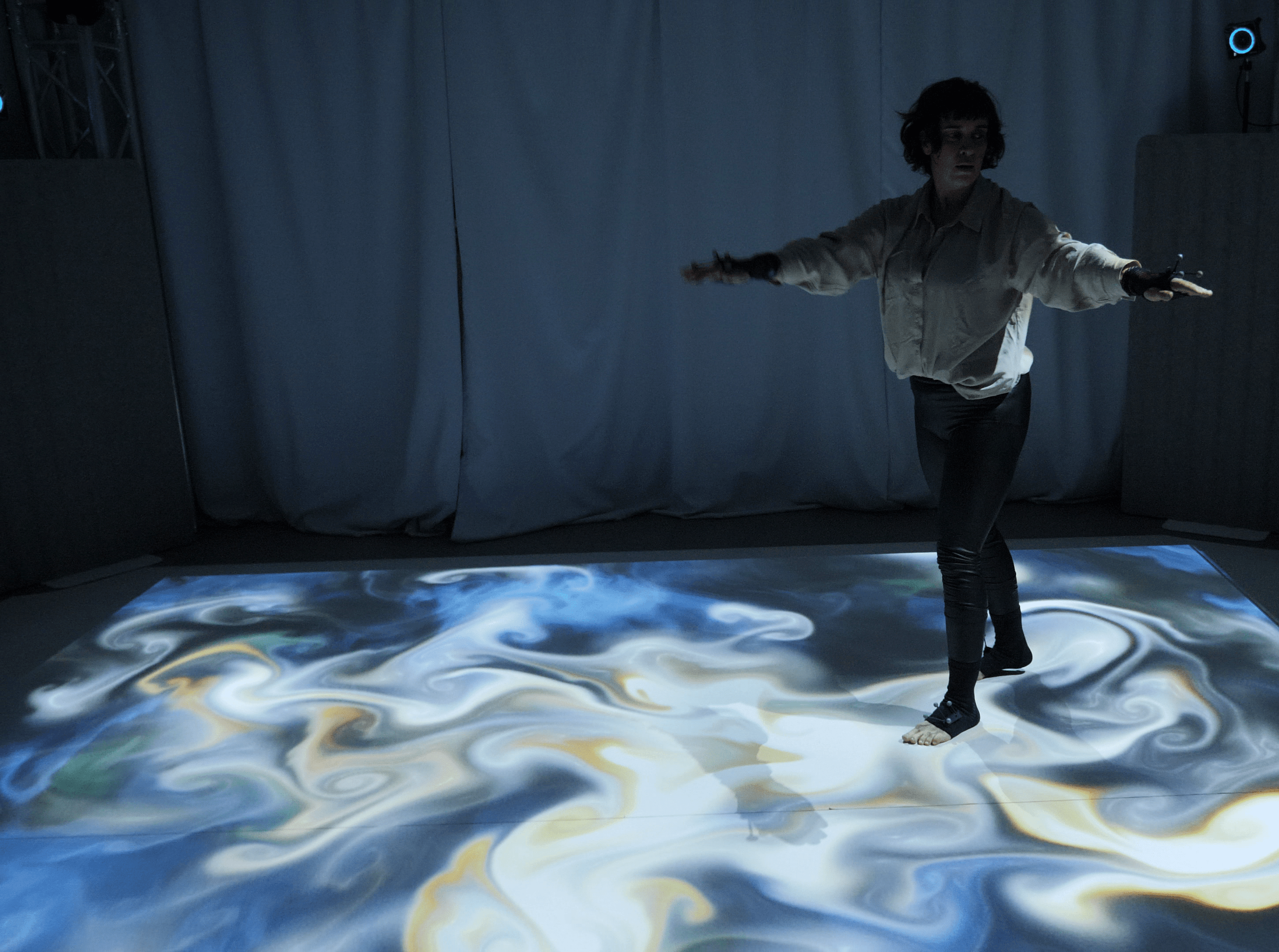

Traces of Motion

Can we elevate human motion through technology? How does movement leave its mark on us and our environment? We use interactive light projection to visualize the hidden paths we walk, elevating human motion into an audiovisual piece.

Mixed Realities

We mix physical reality with computer-assisted real-time visualizations. The result is a new cross-boundary arena where the real and the digital coexist and interact.

Three Worlds

Through three distinct visual worlds and choreographies, we invite viewers to reimagine what augmented performance can be, extending our bodies with light, geometry, order, and chaos.

Technology

Our system tracks human motion with exceptional speed, dynamically adapting visual patterns based on velocity, distance, height, angle, and more. This creates an embodied language that artists can learn and exploit for self-expression.
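
To make the idea of mapping motion to visuals concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the system used in the piece: the `Sample` format, the function names, the normalization ranges, and the choice of output parameters (`brightness`, `spread`, `hue`, `rotation`) are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One tracked position (hypothetical format): seconds and metres."""
    t: float
    x: float
    y: float
    z: float  # height above the floor


def motion_features(prev, curr, origin=(0.0, 0.0)):
    """Derive velocity, distance, height, and angle from two consecutive samples."""
    dt = curr.t - prev.t
    if dt <= 0:
        raise ValueError("samples must be strictly increasing in time")
    dx, dy = curr.x - prev.x, curr.y - prev.y
    return {
        "velocity": math.hypot(dx, dy) / dt,  # floor-plane speed in m/s
        "distance": math.hypot(curr.x - origin[0], curr.y - origin[1]),
        "height": curr.z,
        "angle": math.atan2(dy, dx),  # heading in radians
    }


def clamp01(value):
    """Limit a value to the range 0..1."""
    return max(0.0, min(1.0, value))


def visual_params(features, max_speed=4.0, max_distance=5.0, max_height=2.5):
    """Map motion features to normalized visual parameters (assumed ranges)."""
    return {
        "brightness": clamp01(features["velocity"] / max_speed),
        "spread": clamp01(features["distance"] / max_distance),
        "hue": clamp01(features["height"] / max_height),
        "rotation": features["angle"],
    }


if __name__ == "__main__":
    previous = Sample(t=0.0, x=0.0, y=0.0, z=1.0)
    current = Sample(t=0.5, x=1.0, y=0.0, z=1.25)
    print(visual_params(motion_features(previous, current)))
```

In a sketch like this, a performer who moves faster brightens the projection, and one who reaches higher shifts its color. A fixed, learnable mapping of this kind is one way the "embodied language" described above could arise.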